How to Run Meta Llama 3.1 Locally

I’m excited to tell you about Meta’s Llama 3.1, a powerful AI language model you can use for free. In this article, I’ll show you how to install and run Llama 3.1 on your computer. This means you can use it without the internet and save money on AI costs.

What is Llama 3.1?

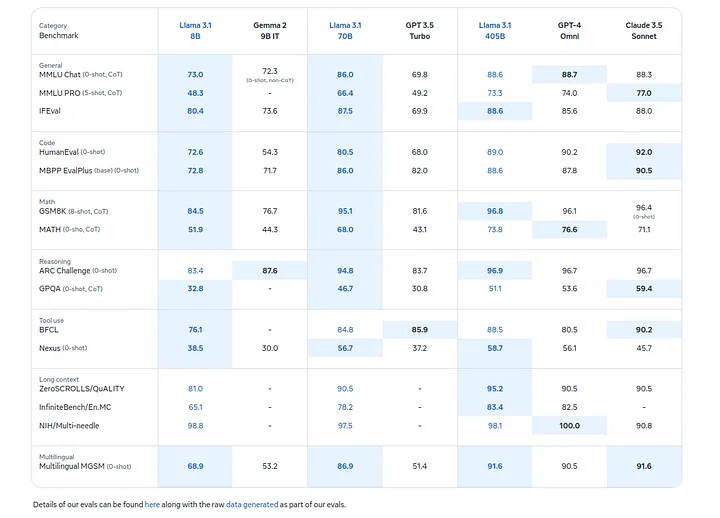

Llama 3.1 is Meta’s newest AI model that’s open for anyone to use. It comes in three sizes:

- A huge 405 billion parameter version

- A medium 70 billion parameter version

- A smaller 8 billion parameter version

Llama 3.1 stands out because of how well it performs for an open model. According to Meta, the largest version is competitive with leading closed models on many benchmarks.

You can read more about it here: https://llama.meta.com/

Why Llama 3.1 is Great

- It’s free.

- Anyone can change and improve it.

- It works as well as expensive private models.

- It understands many languages.

- It can handle long texts. It can work with up to 128,000 tokens at once (a token is a word or a piece of a word), which is great for long writing tasks.
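The 128,000 limit is counted in tokens, not words, so here is a rough sketch for guessing whether a long text will fit. The ratio of 4 tokens per 3 English words is only a rule-of-thumb assumption of mine, not an official figure, and the function names and the file `my_draft.txt` are made up for illustration.

```shell
#!/bin/sh
# ASSUMPTION: about 4 tokens for every 3 English words. This is only a rule
# of thumb; the real count depends on the tokenizer and on the text itself.
estimate_tokens() {
  echo $(( $1 * 4 / 3 ))
}

# Prints "fits" if the estimated token count is within the 128,000-token window.
fits_context() {
  if [ "$(estimate_tokens "$1")" -le 128000 ]; then
    echo "fits"
  else
    echo "too long"
  fi
}

# To count the words in a real file (my_draft.txt is a hypothetical name):
#   words=$(wc -w < my_draft.txt)
fits_context 90000    # 90,000 words is roughly 120,000 tokens
fits_context 100000   # 100,000 words is roughly 133,333 tokens
```

Keep in mind that your question and the model's answer share the same window, so leave some room for the reply.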

Challenges with Llama 3.1

- It needs a lot of computer power: The biggest version needs very powerful computers to run.

- Might have biases: Like all AI, it might repeat unfair ideas from its training data.

- Possible safety issues: Because anyone can see how it works, there might be some safety risks if not used carefully.
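To put the "lot of computer power" point into numbers, here is a back-of-the-envelope sketch. It only counts the memory needed to hold the model's weights (parameters × bits per parameter ÷ 8) and ignores everything else a running model needs, so treat the results as a lower bound. The function name `weights_gb` is my own, and the 16-bit and 4-bit settings are illustrative assumptions rather than what any particular download uses.

```shell
#!/bin/sh
# Rough lower bound on the memory needed for model weights, in GB
# (1 GB = 10^9 bytes, so billions of parameters x bytes per parameter = GB).
# Usage: weights_gb <parameters in billions> <bits per parameter>
weights_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

for size in 8 70 405; do
  echo "${size}B parameters: $(weights_gb "$size" 16) GB at 16-bit, $(weights_gb "$size" 4) GB at 4-bit"
done
```

Even with aggressive 4-bit compression, the 405 billion parameter version needs around 200 GB just for its weights, while the 8 billion parameter version fits in a few gigabytes.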

Where to Try Llama 3.1

Here are some ways you can try out Llama 3.1 online (not all of them are free):

- Meta.ai Chatbot: Meta’s own platform offers access to Llama 3.1, including the 405B model, along with image generation capabilities. However, this is currently limited to U.S. users with a Facebook or Instagram account.

- Perplexity: This AI-powered search tool offers access to Llama 3.1 405B, but it requires a paid subscription.

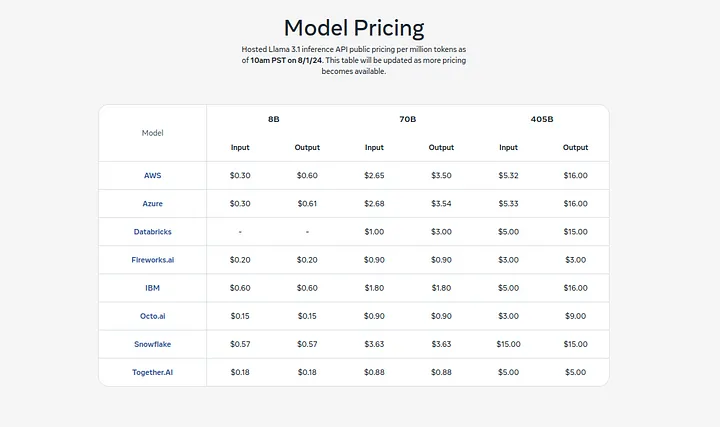

- Cloud Platforms: Various cloud providers offer access to Llama 3.1, including:

  - Amazon Bedrock

  - Microsoft Azure AI

  - Cloudflare Workers AI

  - Snowflake

  - Databricks

  - NVIDIA AI Foundry

  - IBM Cloud

In this tutorial, I’ll show you how to use Llama 3.1 for free on your own machine.

How to Run Llama 3.1 on Your Computer

The 405 billion parameter version needs far more hardware than a typical home computer has, so I’ll show you how to run the 8 billion parameter version instead. Here’s what you need:

- 16 GB of RAM

- 8-core CPU

- 20 GB of free space
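If you are on Linux, a small script like the one below can compare your machine against the list above. This is a sketch under a few assumptions: it relies on `nproc`, `/proc/meminfo`, and `df` being available, it checks free space on the root filesystem (adjust the path if your models will live on another disk), and the helper name `check` is my own.

```shell
#!/bin/sh
# Compare this machine with the requirements listed above (Linux only).

# check NAME HAVE NEED -> prints one line saying whether HAVE >= NEED.
check() {
  if [ "$2" -ge "$3" ]; then
    echo "$1: $2 (ok, need $3)"
  else
    echo "$1: $2 (below the suggested $3)"
  fi
}

if [ -r /proc/meminfo ]; then
  # MemTotal is in kB. Round to the nearest GB, because a "16 GB" machine
  # usually reports slightly less than 16.
  ram_gb=$(awk '/^MemTotal:/ { printf "%d\n", ($2 + 524288) / 1048576 }' /proc/meminfo)
  # nproc counts logical CPUs, which can be more than the physical core count.
  cpus=$(nproc)
  # Free space in GB on the root filesystem (an assumption; change "/" if needed).
  disk_gb=$(df -Pk / | awk 'NR == 2 { printf "%d\n", $4 / 1048576 }')

  check "RAM (GB)" "$ram_gb" 16
  check "CPUs" "$cpus" 8
  check "Free disk (GB)" "$disk_gb" 20
fi
```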

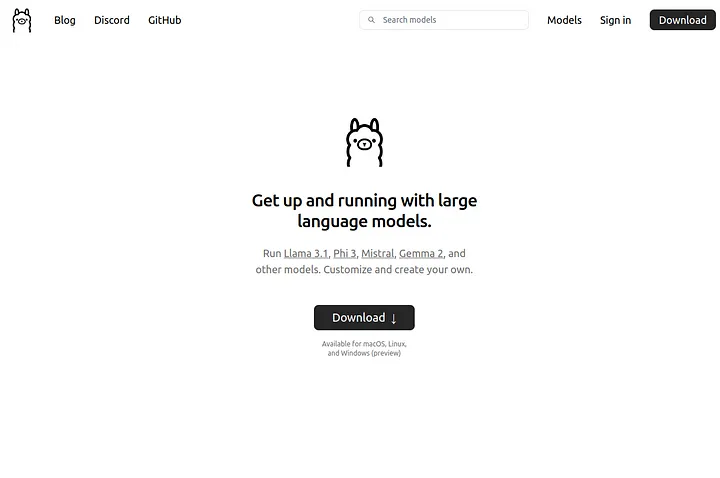

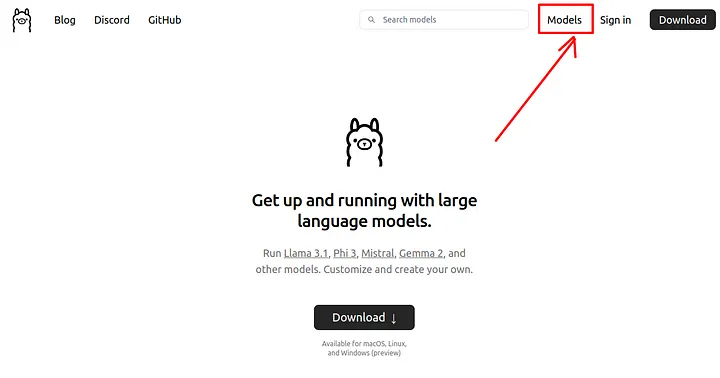

Step 1. Download and Install the Ollama Tool

Go to https://ollama.com/ and download the tool for your operating system.

For Linux, I’ll use this command:

curl -fsSL https://ollama.com/install.sh | sh

The command will install Ollama, which is a tool for running and managing large language models (LLMs) locally on your machine.
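Before moving on, it is worth confirming that the install worked. Here is a minimal check, assuming the installer put `ollama` on your PATH; the helper name `have` is my own.

```shell
#!/bin/sh
# Report whether a command is available on the PATH.
have() {
  command -v "$1" >/dev/null 2>&1
}

if have ollama; then
  # Prints the installed Ollama version.
  ollama --version
else
  echo "ollama not found on PATH; try opening a new terminal or re-running the installer"
fi
```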

Step 2. Download the Llama 3.1 Model

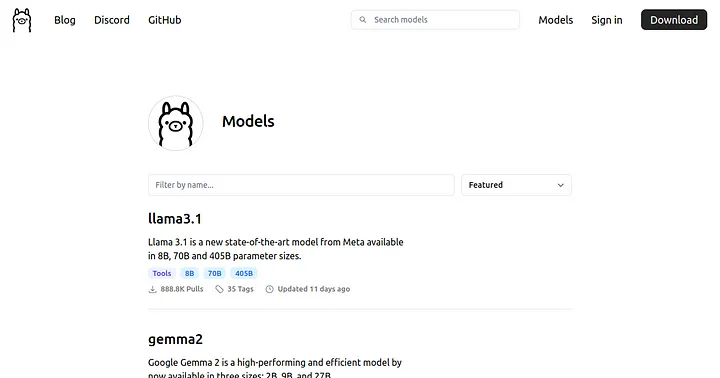

On the same website, click on the link “Models” in the top right corner.

Then, find Llama 3.1 in the list.

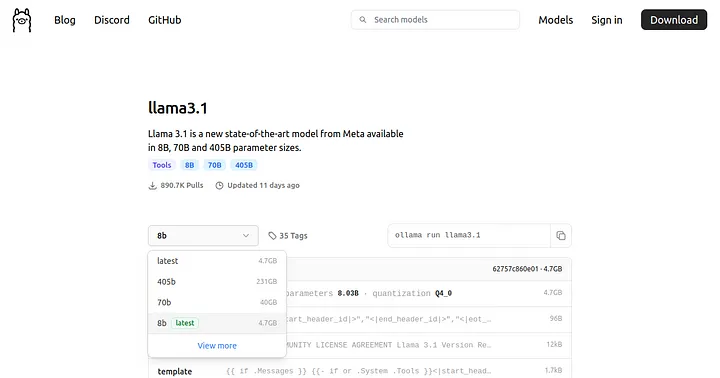

Click on the link and then select the desired model from the drop-down menu.

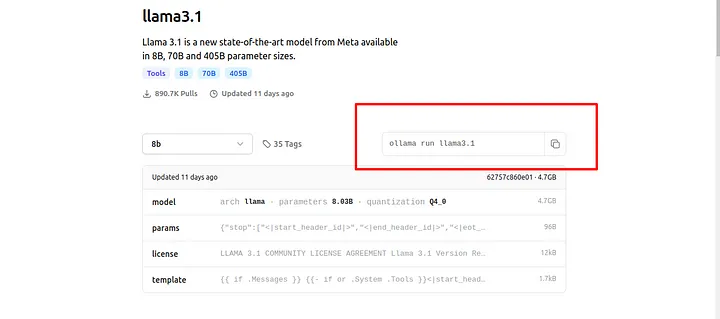

Copy the command and paste it into your terminal.

Terminal command:

ollama run llama3.1

Wait for the download to finish (it might take 30 minutes or more, depending on your connection). When it’s done, a chat prompt appears and you can talk to the model right in your terminal. Enjoy! :)
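Besides the interactive chat, Ollama also answers requests over a local HTTP API, which is handy for scripts. The sketch below assumes Ollama is running on its default address, `http://localhost:11434`, and that you have `curl` installed. The helper names `generate_body` and `ask` are my own, and the escaping is deliberately simple, so keep prompts on a single line.

```shell
#!/bin/sh
# Build the JSON body for Ollama's /api/generate endpoint.
# NOTE: this minimal helper only escapes backslashes and double quotes,
# so it is meant for single-line prompts.
generate_body() {
  escaped=$(printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g')
  printf '{"model": "llama3.1", "prompt": "%s", "stream": false}\n' "$escaped"
}

# Send one prompt to the local Ollama server and print the raw JSON reply.
ask() {
  curl -s http://localhost:11434/api/generate -d "$(generate_body "$1")"
}

# Show the request body that would be sent for a sample prompt.
generate_body 'Why is the sky blue?'
```

With Ollama running, `ask "Why is the sky blue?"` should return a JSON object whose `response` field holds the answer. For a quick one-off question you can also pass the prompt directly, as in `ollama run llama3.1 "Why is the sky blue?"`.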

Using Llama 3.1 Without Internet

One cool thing about having Llama 3.1 on your computer is you can use it without the internet. This is great if you need to keep your data private.
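Offline use only works if the model is already on your disk, so it helps to check before you disconnect. This sketch reads the table printed by `ollama list` and looks for a model by name. The helper name `has_model` is my own, and it assumes the first column of that table holds names such as `llama3.1:latest`.

```shell
#!/bin/sh
# Read `ollama list` output on stdin and succeed if the named model is listed.
# The first line is a header; the first column looks like "llama3.1:latest".
has_model() {
  awk -v m="$1" 'NR > 1 { split($1, a, ":"); if (a[1] == m) found = 1 } END { exit !found }'
}

if command -v ollama >/dev/null 2>&1; then
  if ollama list | has_model llama3.1; then
    echo "llama3.1 is downloaded and ready for offline use"
  else
    echo "llama3.1 is not downloaded yet; run 'ollama run llama3.1' while online"
  fi
fi
```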

Things to Remember

There are a few downsides to know about:

- The model only knows things up to its training cutoff, which Meta lists as December 2023 for Llama 3.1

- It can’t look at files on your computer or websites

- It might be slower than online AI services

How to Give Llama 3.1 Information

The model can’t read your files or websites, but you can:

- Copy and paste text into it

- Open files you keep in services like Dropbox and paste in the parts you need

- Summarize what you want it to know in your question
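Copying and pasting can also be done from the command line. The sketch below puts an instruction and the contents of a text file into one prompt and hands it to the model. The helper name `prompt_with_file` and the file `notes.txt` are made up for illustration, and very large files may not fit in the model's context.

```shell
#!/bin/sh
# Build a prompt: an instruction, a blank line, then the text of a file.
# Usage: prompt_with_file "<instruction>" <path to a text file>
prompt_with_file() {
  printf '%s\n\n%s\n' "$1" "$(cat "$2")"
}

# notes.txt is a hypothetical file; the model only sees the text pasted
# into the prompt, not the file itself.
if command -v ollama >/dev/null 2>&1 && [ -f notes.txt ]; then
  ollama run llama3.1 "$(prompt_with_file "Summarize these notes:" notes.txt)"
fi
```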

Wrapping Up

Running Llama 3.1 on your own computer is a great way to use powerful AI without the internet or ongoing costs. It has some limits, but it’s still amazing to have such a smart AI tool on your own machine.

If you like this tutorial, please click like and share. You can follow me on YouTube, join my Telegram, or support me on Patreon.

Cheers!